Musings on Running LLMs Locally

In an effort to find something interesting to do with my home server, I’ve been experimenting with running LLMs locally, inspired by a few people on social media.

This process is pretty straightforward and has been documented in many other posts (see the references section below), but it merits repeating here (if nothing else, for my own sanity).

Instructions

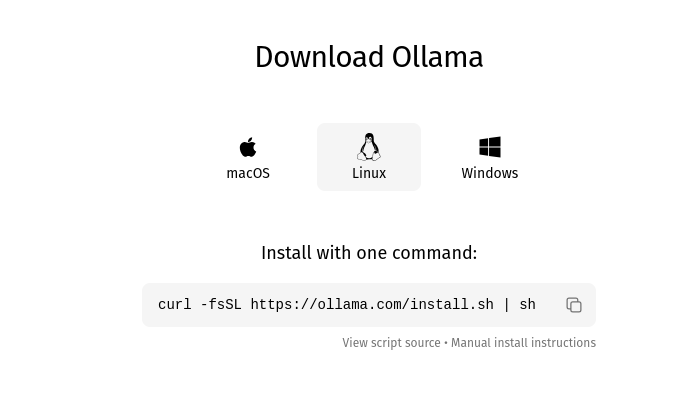

- Install ollama

Who doesn’t love a great curl piped into sh :p

- Run `ollama run <model>`. A list of models you can download and run out of the box can be found in their model library doc. Since it’s all the rage, I decided to play around with `deepseek-r1`.
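To double-check that the model actually landed on the server after the pull, something like the following might help. It is only a sketch: it assumes Ollama's HTTP API is listening on its default port 11434 and uses the `/api/tags` endpoint, which lists locally available models. The helper names and the `localhost` address are placeholders of mine, not part of the setup above.

```python
import json
from urllib.request import urlopen

# Assumption: Ollama is listening on its default port on this machine.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in tags_payload.get("models", [])]


def has_model(tags_payload: dict, wanted: str) -> bool:
    """True if any local model matches `wanted`, ignoring the ':tag' suffix."""
    return any(name.split(":")[0] == wanted for name in model_names(tags_payload))


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """GET /api/tags and decode the JSON body (needs a running server)."""
    with urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.load(resp)
```

With the server running, `has_model(fetch_tags(), "deepseek-r1")` should report whether the model is available locally.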

From there you can start playing around with the model. Since I had this running on my home server, I sent my queries to it as API requests rather than using the interactive prompt.
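For reference, here is a rough sketch of what such an API request can look like in Python, using only the standard library. It assumes Ollama's `/api/generate` endpoint on the default port 11434 with streaming turned off. The hostname `homeserver.local` and the function names are made up for illustration, so swap in whatever your server is actually called. Note that Ollama binds to localhost by default, so reaching it from another machine typically means setting `OLLAMA_HOST` on the server first.

```python
import json
from urllib.request import Request, urlopen

# Assumptions: default Ollama port, and a placeholder hostname for the home server.
OLLAMA_URL = "http://homeserver.local:11434"


def build_generate_request(prompt: str, model: str = "deepseek-r1",
                           base_url: str = OLLAMA_URL) -> Request:
    """Build a non-streaming POST to /api/generate."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return Request(
        f"{base_url}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **kwargs) -> str:
    """Send the prompt and return the model's text (needs a running server)."""
    # Generous timeout: a small machine can take minutes to answer.
    with urlopen(build_generate_request(prompt, **kwargs), timeout=600) as resp:
        return json.load(resp)["response"]
```

Calling `generate("Why is the sky blue?")` then returns the full reply as a single string. Setting `"stream": False` keeps the example simple, at the cost of waiting for the whole answer before anything is shown.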

Concluding Thoughts

To the surprise of probably no one, my HP Thin Client performed pretty abysmally, but it was a fun little project. I’m planning to upgrade the RAM (from 8GB to 16GB) and the SSD (from 16GB to 64GB), and will revisit this once they’re installed.